Project C5 - Auditory scene analysis with normal hearing listeners and users of cochlear implants

In natural environments multiple sounds often occur simultaneously and vary dynamically over time. The acoustic information becomes intermingled as it spreads in time and space and reflects off objects before it reaches the ear. The auditory system is confronted with this complex signal and must provide mechanisms to analyze it, a problem referred to as Auditory Scene Analysis (Bregman, 1994). It does so efficiently and successfully in normal-hearing listeners, who generally have no problem following a conversation in crowded rooms or other challenging environments. Yet, the underlying principles are still not fully understood (Shamma and Micheyl, 2010; Schwartz et al., 2012; Bremen and Middlebrooks, 2013).

Locating the source of a sound critically depends on the comparison of information from both ears. In healthy listeners, localization is very precise (~1-2° for central sound sources; Mills, 1958; Seeber, 2002), even in rooms with multiple reflections (Hartmann, 1983; Grantham, 1995; Kerber and Seeber, 2013), because the healthy auditory system is able to perceptually suppress early reflections, a mechanism known as the Precedence Effect.
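To give a feeling for what comparing information from both ears means in terms of timing, the following minimal sketch estimates the interaural time difference (ITD) for a distant source. It uses Woodworth's spherical-head approximation, which is a textbook model and not a method taken from this project. The head radius, the speed of sound and the function name `woodworth_itd` are illustrative assumptions.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at room temperature
HEAD_RADIUS = 0.0875    # m, assumed average adult head radius


def woodworth_itd(azimuth_deg: float) -> float:
    """Approximate ITD in seconds for a distant source at 0..90 degrees azimuth.

    Woodworth's spherical-head formula: ITD = (a / c) * (theta + sin(theta)),
    with head radius a, speed of sound c and azimuth theta in radians.
    """
    theta = math.radians(azimuth_deg)
    return HEAD_RADIUS / SPEED_OF_SOUND * (theta + math.sin(theta))


# Print the ITD for small offsets from straight ahead and for a source at the side.
for az in (1, 2, 90):
    print(f"{az:>2} deg -> {woodworth_itd(az) * 1e6:.1f} microseconds")
```

Under these assumptions, a 1° offset from straight ahead corresponds to an ITD of roughly 9 microseconds, compared with about 0.66 ms for a source directly to the side. This illustrates how fine the timing comparison between the ears has to be to reach the precision quoted above.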

Participating in verbal conversation is an essential part of everyday life and important for social interaction. In deaf people, cochlear implants (CIs) can be used to provide a sense of hearing by artificially stimulating the auditory nerve. However, the efficiency of sound coding in CIs appears constrained: while speech understanding in quiet is possible and often good with CIs, CI users have severe difficulties differentiating multiple sound sources and hence are left with a potpourri of superimposed sounds (Seeber and Hafter, 2011; Kerber and Seeber, 2012). The reasons for this lie, among other things, in the way cochlear implants currently work: they replace the function of the inner ear by stimulating the auditory nerve with electric pulses. While the auditory nerve has roughly 30,000 nerve fibers to transmit information to the brain, the CI has only a small number of electrodes (usually 12-24) to stimulate them (Wilson and Dorman, 2008). Hence, individual nerve fibers cannot each be stimulated with their own code. In addition, it is not known when to place an electric pulse to best transmit the fine timing code that exists in the auditory nerve.

Objectives and description of the project

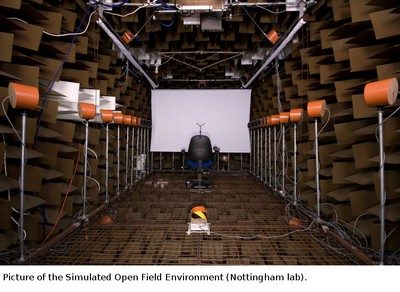

In this project we aim to understand how CI coding affects the perception of multiple simultaneous sounds and how the existing limitations can be overcome. We will investigate the potential of different coding strategies by running experiments with CI users and normal-hearing listeners. We aim to find features that improve the perception of auditory objects and localization performance in CI users. To this end, acoustic features known to contribute to auditory scene analysis, object segregation and the precedence effect will be varied systematically.

For our studies we have developed a unique setup that allows us to play multiple sounds from different directions. We can simulate the acoustics of arbitrary rooms in the laboratory by playing artificial reflections of the sound from loudspeakers. In this way, we can move the listener from one moment to the next from a classroom to a cathedral without actually changing position or scenery, all under controlled laboratory conditions. We call this system the Simulated Open Field Environment (Seeber et al., 2010) and are in the process of building it in our new labs in Munich.

While we use the Simulated Open Field Environment to present spatial sounds acoustically to our listeners, we can use the direct stimulation technique with users of cochlear implants to carefully control the electric stimuli delivered by their implants. Special hardware allows us to deliver sounds, i.e. electric pulses, to the patient's implant under direct control of a computer. We use this to research and test new strategies for CIs before they are implemented in a commercial speech processor.
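The idea of simulating a room by playing artificial reflections from loudspeakers can be illustrated with a small geometric sketch. It is not a description of how the Simulated Open Field Environment is implemented. It only assumes a simple first-order image-source model in two dimensions with a single wall, and the function name `first_order_reflection`, the reflection coefficient and the coordinate convention are all hypothetical choices made for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at room temperature


def first_order_reflection(source, listener, wall_x, reflection_coeff=0.8):
    """Describe one wall reflection relative to the direct sound.

    source, listener: (x, y) positions in metres; the listener faces +y.
    wall_x: position of a wall along the plane x = wall_x (assumed geometry).
    reflection_coeff: assumed pressure reflection factor of the wall.

    Returns (extra_delay_s, relative_gain, image_azimuth_deg), where the
    azimuth is 0 degrees straight ahead and positive towards +x.
    """
    sx, sy = source
    lx, ly = listener
    # Mirror the source at the wall to obtain the image source.
    image = (2.0 * wall_x - sx, sy)
    direct = math.dist(source, listener)
    reflected = math.dist(image, listener)
    # The reflection arrives later because its path is longer.
    extra_delay = (reflected - direct) / SPEED_OF_SOUND
    # 1/r distance attenuation relative to the direct sound, times wall loss.
    relative_gain = reflection_coeff * direct / reflected
    azimuth = math.degrees(math.atan2(image[0] - lx, image[1] - ly))
    return extra_delay, relative_gain, azimuth


# Example: source 2 m in front of the listener, wall 3 m to the right.
delay, gain, azimuth = first_order_reflection((0.0, 2.0), (0.0, 0.0), wall_x=3.0)
print(f"delay {delay * 1e3:.1f} ms, gain {gain:.2f}, azimuth {azimuth:.1f} deg")
```

In a loudspeaker-based setup, one plausible use of these three values would be to play a delayed and attenuated copy of the sound from a loudspeaker near the computed azimuth. Changing the assumed wall position or reflection coefficient then changes the simulated room without moving the listener.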

Bregman AS (1994) Auditory scene analysis: The perceptual organization of sound: The MIT Press.

Bremen P, Middlebrooks JC (2013) Weighting of Spatial and Spectro-Temporal Cues for Auditory Scene Analysis by Human Listeners. PLoS ONE 8:e59815.

Grantham DW (1995) Spatial Hearing and Related Phenomena. In: Hearing (Moore BCJ, ed), pp 297-345: Academic Press, San Diego.

Hartmann WM (1983) Localization of sound in rooms. J Acoust Soc Am 74:1380-1391.

Kerber S, Seeber BU (2012) Sound localization in noise by normal-hearing listeners and cochlear implant users. Ear & Hearing 33:445-457.

Kerber S, Seeber BU (2013) Localization in reverberation with cochlear implants: predicting performance from basic psychophysical measures. J Assoc Res Otolaryngol 14:379-392.

Mills AW (1958) On the Minimum Audible Angle. J Acoust Soc Am 30:237-246.

Schwartz A, McDermott JH, Shinn-Cunningham B (2012) Spatial cues alone produce inaccurate sound segregation: the effect of interaural time differences. J Acoust Soc Am 132:357-368.

Seeber B (2002) A New Method for Localization Studies. Acta Acustica united with Acustica 88:446-450.

Seeber BU, Hafter ER (2011) Failure of the precedence effect with a noise-band vocoder. J Acoust Soc Am 129:1509-1521.

Seeber BU, Kerber S, Hafter ER (2010) A System to Simulate and Reproduce Audio-Visual Environments for Spatial Hearing Research. Hearing Research 260:1-10.

Shamma SA, Micheyl C (2010) Behind the scenes of auditory perception. Current Opinion in Neurobiology 20:361-366.

Wilson BS, Dorman MF (2008) Cochlear implants: A remarkable past and a brilliant future. Hearing Research 242:3-21.