Project B1 – Learning invariant representations from retinal activity

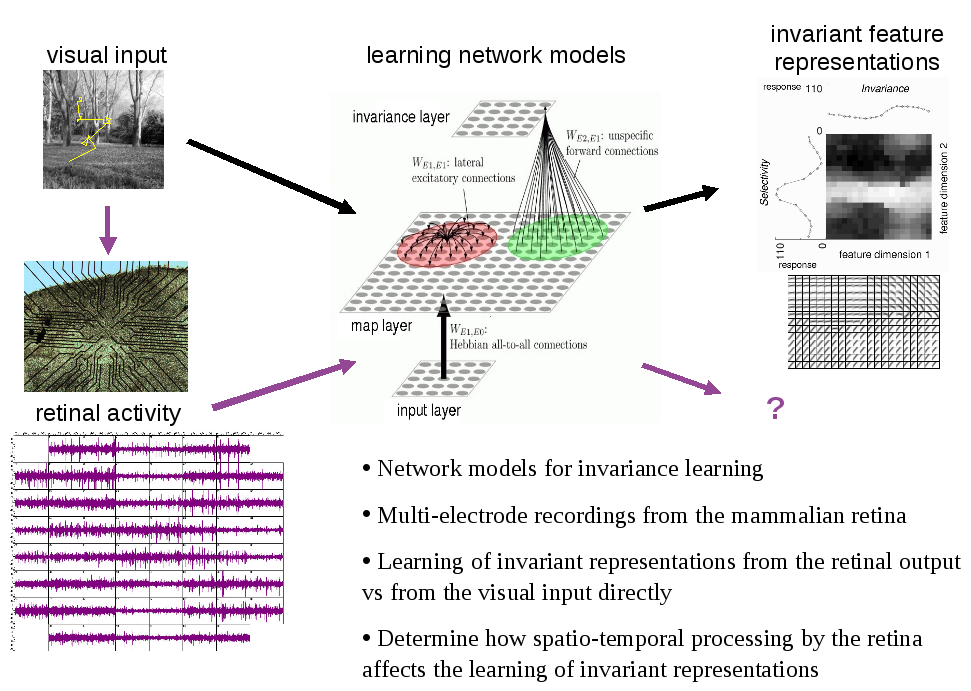

The visual system can recognize objects regardless of their position or the viewing conditions, and it achieves a stable representation of visual space independent of eye movements. How the nervous system acquires these invariances is a fundamental question in neuroscience. Computational studies by us [1] and others [2] suggest that invariant representations can be learned by neural mechanisms that exploit the spatio-temporal statistics of the inputs (Figure 1). We have previously investigated visual processing in the retina in both experimental and computational studies [3-5]. In this project we will explore how the spatio-temporal transformation of visual signals by the retina affects the learning of invariant representations.
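To make the idea of learning from spatio-temporal input statistics concrete, the following is a minimal sketch using linear slow feature analysis, a standard temporal-slowness technique. It is chosen here purely for illustration and is not necessarily the learning rule used in [1] or [2]. The function name `linear_sfa`, the toy signals, and the mixing matrix are all assumptions made for this example.

```python
import numpy as np

def linear_sfa(x, n_features=1):
    """Illustrative linear slow feature analysis (hypothetical helper).

    x: array of shape (T, D), one input vector per time step.
    Returns projection weights of shape (D, n_features) and the input mean.
    """
    mean = x.mean(axis=0)
    xc = x - mean
    # Whiten the inputs so every projection has unit variance.
    cov = xc.T @ xc / len(xc)
    evals, evecs = np.linalg.eigh(cov)
    keep = evals > 1e-10  # drop near-degenerate directions
    whiten = evecs[:, keep] / np.sqrt(evals[keep])
    z = xc @ whiten
    # Covariance of temporal differences: small eigenvalues = slow directions.
    dz = np.diff(z, axis=0)
    dcov = dz.T @ dz / len(dz)
    _, devecs = np.linalg.eigh(dcov)  # eigenvalues in ascending order
    return whiten @ devecs[:, :n_features], mean

# Toy data (assumed): a slowly varying latent cause mixed with a fast one.
t = np.linspace(0, 2 * np.pi, 2000)
slow = np.sin(t)
fast = np.sin(37 * t)
mix = np.array([[1.0, 0.8], [0.6, -1.0]])  # arbitrary mixing matrix
x = np.column_stack([slow, fast]) @ mix.T

w, mean = linear_sfa(x)
y = (x - mean) @ w[:, 0]
corr = abs(np.corrcoef(y, slow)[0, 1])
print("absolute correlation with the slow latent signal:", corr)
```

In such a sketch, `x` could in principle hold raw stimulus intensities, the outputs of a retina model, or binned firing rates from recorded cells, so that the learned projections can be compared across input types.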

Objectives and description of work

Combining experimental and computational studies, we will investigate how the signals that the retina actually sends to the brain support the subsequent representation of spatio-temporal stimulus properties. We will develop and apply models for learning invariant representations from the spatio-temporal structure of the inputs, and we will compare the representations that result (1) from the visual inputs directly, (2) from the outputs of models of retinal processing [3,4], and (3) from actual retinal outputs as recorded with multi-electrode arrays from the isolated vertebrate retina [3]. We will identify the stimulus features and statistical properties that retinal processing enhances or suppresses, and characterize how this processing affects the subsequent learning of invariant representations of objects and space. We will take into account the specific spatio-temporal dependencies introduced by eye movements in natural vision, and will consider the effects of adaptive mechanisms based on the results of subproject A4. Computational modeling and electrophysiological recordings will be tightly coupled: both PIs will jointly design and supervise the work, and regular project meetings will involve all researchers in the project.

[1]: Michler et al. J Neurophysiol 2010. [2]: Einhäuser et al. Biol Cyb 2005. [3]: Gollisch & Meister Science 2008. [4]: Gollisch & Meister Biol Cyb 2008. [5]: Wachtler et al. Journal of Vision 2007.