Project C-T1 - Sensor Fusion in Cortical Circuits: Modeling and Behavioral Experiments in Man and Machine

Conventional approaches to implementing computation and engineering novel algorithms have been highly successful, especially for problems that can be formalized as a mathematical mapping from input to output. However, extracting reliable information from ambiguous and noisy real-world environments remains resistant to straightforward solutions and often requires elaborate theoretical reasoning and significant processing resources.

The main computations performed by the brain involve nonlinear transformations (e.g. sensory-motor transformations), cue integration, or both [1]. For instance, to reach for an object by hand, one must configure the arm joints according to the visual location of the object and proprioceptive cues (e.g. the position of the body or eyes). The available sensory information is often uncertain and ambiguous, yet humans can perceive and estimate the state of the real world and consequently handle cognitive tasks efficiently despite noisy signals. Sensory perception in cortical areas is supported by multiple paths of information targeting uni-modal or multi-modal areas of the brain, which often mutually affect each other. Some simulations of cortically inspired computational frameworks with hand-crafted connectivity have shown how de-noising, inference and sensory perception could be handled by the brain [2]. Recently, an unsupervised framework for relation learning between two interacting populations of neurons has been proposed, which allows the network to learn arbitrary relations between two sensory variables [3]. However, a flexible computational framework that learns relationships between cues rather than relying on fixed networks remains an open challenge, especially when a larger number of modalities is involved [3].
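To make the notion of cue integration concrete, the following minimal sketch shows the textbook reliability-weighted (inverse-variance) fusion of independent Gaussian cues. This model is an illustrative assumption and is not spelled out in the text above; the function name `fuse_gaussian_cues` and the numeric values for the visual and proprioceptive cues are hypothetical.

```python
import numpy as np

def fuse_gaussian_cues(means, variances):
    """Fuse independent Gaussian cues by weighting each with its precision.

    Assumption: every cue is an unbiased, independent Gaussian estimate of
    the same quantity. This is a standard idealization, not the project's
    own model.
    """
    means = np.asarray(means, dtype=float)
    precisions = 1.0 / np.asarray(variances, dtype=float)
    fused_variance = 1.0 / precisions.sum()            # never larger than the best cue
    fused_mean = fused_variance * (precisions * means).sum()
    return fused_mean, fused_variance

# Hypothetical example: a sharp visual cue and a noisier proprioceptive cue
# for the same hand position (arbitrary units).
fused_mean, fused_variance = fuse_gaussian_cues(means=[10.0, 14.0],
                                                variances=[1.0, 4.0])
print(fused_mean, fused_variance)
```

Under these assumptions the fused estimate lies closer to the more reliable cue, and its variance is smaller than that of either cue alone, which is the sense in which combining cues yields more reliable information.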

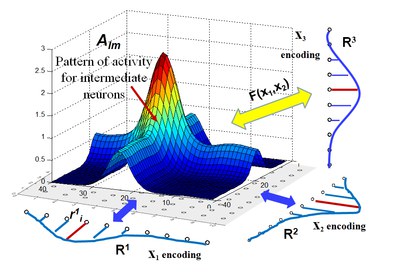

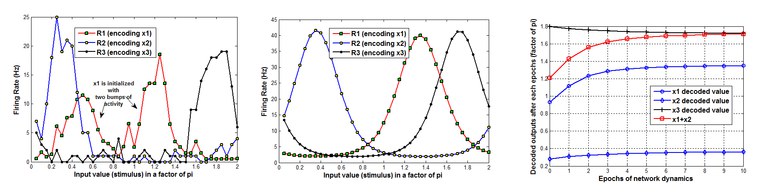

We have proposed a flexible multi-cue integration framework in which the network learns arbitrary relations that express one of the encoded sensory variables as a function of the others, using biologically realistic mechanisms such as Hebbian learning, divisive normalization, and winner-take-all [4]. The network thereby provides scalable computational machinery for relation satisfaction using attraction dynamics. We demonstrate that, once the plastic synaptic weights have been learned, the network can perform several principal computational tasks such as inference and reasoning, de-noising, cue integration and decision making. Two important features of this framework are its scalability to larger numbers of modalities and its flexibility in realizing smooth relation functions.
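The following is a simplified sketch of how the three named mechanisms could work together, assuming Gaussian population codes and a single feed-forward weight matrix. It is not the implementation from [4]: the population size, tuning width, sample count, the simple sum-based form of divisive normalization, and all function names are assumptions chosen for illustration.

```python
import numpy as np

N = 25                                # neurons per population (assumed)
CENTERS = np.linspace(0.0, 1.0, N)    # preferred values of the neurons
WIDTH = 0.05                          # tuning-curve width (assumed)

def divisive_normalization(activity, sigma=1e-9):
    """Divide each response by the summed population activity (simple form)."""
    return activity / (sigma + activity.sum())

def encode(value):
    """Population code: Gaussian tuning curves followed by normalization."""
    activity = np.exp(-0.5 * ((value - CENTERS) / WIDTH) ** 2)
    return divisive_normalization(activity)

def train(relation, n_samples=4000, seed=0):
    """Hebbian learning of z = relation(x, y) from randomly sampled examples."""
    rng = np.random.default_rng(seed)
    weights = np.zeros((N, N * N))
    for _ in range(n_samples):
        x, y = rng.uniform(0.0, 1.0, size=2)
        pre = np.outer(encode(x), encode(y)).ravel()   # joint input activity
        post = encode(relation(x, y))                  # output activity
        weights += np.outer(post, pre)                 # co-activity strengthens synapses
    return weights

def infer(weights, x, y):
    """Drive the output population and read it out by winner-take-all."""
    pre = np.outer(encode(x), encode(y)).ravel()
    post = divisive_normalization(weights @ pre)
    return CENTERS[np.argmax(post)]                    # preferred value of the winner

# Hypothetical relation: z = x * y on the unit square.
weights = train(lambda x, y: x * y)
estimate = infer(weights, 0.5, 0.5)   # should land close to 0.25
print(estimate)
```

The readout resolution is limited by the spacing of the preferred values, so the estimate is only expected to match the true relation to within roughly one neuron spacing. Extending the sketch to more input variables would enlarge the joint input code, which is one reason the scalability claim of the actual framework matters.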

As a prospective direction for this project, we are investigating a more generic computational architecture, possibly built by cascading simple modules or recurrent networks. This architecture is inspired by the mammalian cortex, where information is provided through multiple paths and processing is performed by interconnected areas, each representing a different type of information about the state of the world. Connections between areas implement relationships, and computation occurs as each area tries to be consistent with the areas it is connected to. The system as a whole generates a coherent but distributed representation of the current state of the ambiguous world. In contrast to traditional architectures, where a central processor executes instructions over data stored in memory, we blend information representation with local dedicated processing, as the brain does. We will demonstrate the viability of our approach by creating a concrete system that integrates visual, auditory, and other (e.g. vestibular) input within a network of areas representing these different types of information.
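The idea that computation emerges from each area trying to stay consistent with its neighbours can be illustrated with a toy relaxation network. The sketch below assumes three areas holding scalar estimates a, b and c that are linked by the hypothetical relation c = a + b; the update rule, the parameter values and the function name `relax` are illustrative assumptions and not the project's architecture.

```python
import numpy as np

def relax(observations, confidences, steps=1000, rate=0.2):
    """Relax three areas (a, b, c) toward the assumed relation c = a + b.

    Each area is pulled toward its own sensory input, weighted by that
    input's confidence, and toward agreement with the areas it connects to.
    A confidence of 0 marks a missing input that is inferred from the others.
    """
    obs = np.asarray(observations, dtype=float)
    conf = np.asarray(confidences, dtype=float)
    relation = np.array([1.0, 1.0, -1.0])   # a + b - c should be zero
    state = obs.copy()
    for _ in range(steps):
        mismatch = relation @ state          # how strongly the relation is violated
        state -= rate * (conf * (state - obs) + relation * mismatch)
    return state

# Inconsistent inputs (1 + 2 != 4): every area gives way a little.
print(relax([1.0, 2.0, 4.0], confidences=[1.0, 1.0, 1.0]))

# Missing input for c: its value is inferred from a and b.
print(relax([1.0, 2.0, 0.0], confidences=[1.0, 1.0, 0.0]))
```

No central processor appears in this sketch: each update uses only an area's own input and the mismatch with connected areas, and the coherent estimate is the fixed point of these local interactions. In the first call the conflict is shared among the three areas; in the second, the unobserved area settles on the value implied by the relation.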

References

[1] A. Pouget, L. H. Snyder, “Computational approaches to sensorimotor transformations”, Nat. Neuroscience, vol. 3, Nov 2000, pp. 1192-1198.

[2] M. Jazayeri, J. A. Movshon, “Optimal representation of sensory information by neural populations”, Nat. Neuroscience, vol. 9, 2006, pp. 690-696.

[3] M. Cook, L. Gugelman, F. Jug, C. Krautz, A. Steger, “Unsupervised learning of relations”, Artificial Neural Networks – ICANN 2010, vol. 6352, 2010, pp. 164-173.

[4] M. Firouzi, C. Axenie, S. Glasauer, J. Conradt, “A Flexible Framework for Cue Integration by Line Attraction Dynamics and Divisive Normalization”, BCCN Sparks workshop, Munich, 2-4 Dec 2013.